Sensor fusion integrates data from multiple sensors, enhancing the accuracy and reliability of robotic perception compared to relying on a single sensor. This approach mitigates individual sensor limitations such as noise, occlusion, and environmental sensitivity, leading to more robust decision-making. By combining diverse sensor inputs, robots achieve improved spatial awareness and obstacle detection in complex environments.

Table of Comparison

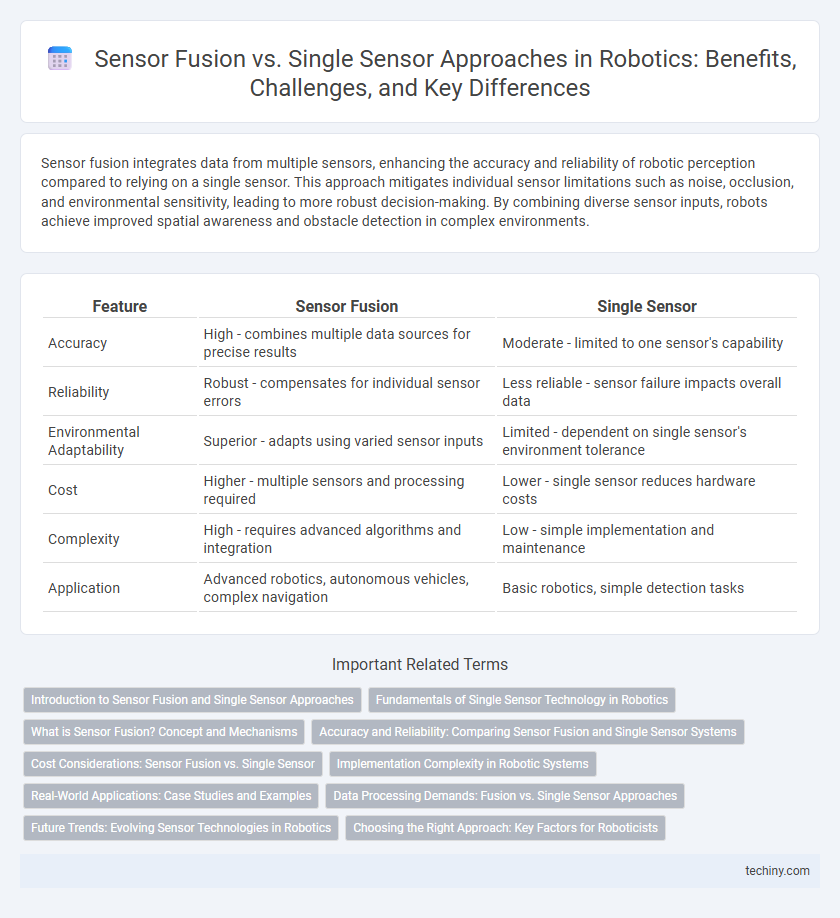

| Feature | Sensor Fusion | Single Sensor |
|---|---|---|
| Accuracy | High - combines multiple data sources for precise results | Moderate - limited to one sensor's capability |
| Reliability | Robust - compensates for individual sensor errors | Less reliable - sensor failure impacts overall data |
| Environmental Adaptability | Superior - adapts using varied sensor inputs | Limited - dependent on single sensor's environment tolerance |
| Cost | Higher - multiple sensors and processing required | Lower - single sensor reduces hardware costs |
| Complexity | High - requires advanced algorithms and integration | Low - simple implementation and maintenance |
| Application | Advanced robotics, autonomous vehicles, complex navigation | Basic robotics, simple detection tasks |

Introduction to Sensor Fusion and Single Sensor Approaches

Sensor fusion integrates data from multiple sensors to enhance accuracy, reliability, and environmental understanding in robotics, overcoming limitations inherent in single sensor approaches such as noise and partial information. Single sensors provide specific, isolated data points, which can lead to decreased performance in complex or dynamic environments due to their constrained perception capabilities. Utilizing sensor fusion enables robots to achieve more robust decision-making and improved situational awareness by combining complementary sensor inputs like LiDAR, cameras, and IMUs.

Fundamentals of Single Sensor Technology in Robotics

Single sensor technology in robotics relies on individual sensory inputs such as LiDAR, cameras, or ultrasonic sensors to perceive the environment, providing specific data like distance, visual imagery, or obstacle detection. These sensors have fundamental constraints including limited field of view, susceptibility to noise, and reduced reliability under varying environmental conditions. Understanding the strengths and limitations of single sensor types is crucial for optimizing robotic perception and informing decisions about integrating multi-sensor fusion systems.

What is Sensor Fusion? Concept and Mechanisms

Sensor fusion integrates data from multiple sensors to create a more accurate, reliable, and comprehensive understanding of the environment than single sensors can provide. It uses algorithms like Kalman filters, Bayesian networks, and neural networks to combine inputs, reduce noise, and overcome individual sensor limitations. This process enhances robotic perception, enabling better decision-making and improved autonomy in navigation, mapping, and object recognition tasks.
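As a rough illustration of how a Kalman-style update combines inputs, the sketch below fuses two range readings of the same distance into one estimate. It is a minimal scalar example, not a full filter: it assumes the state is observed directly, the sensor noise is independent, and there is no motion model. The names `Estimate`, `kalman_update`, and `fuse_readings`, as well as all numeric values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # current best estimate (e.g. range in metres)
    variance: float  # uncertainty of that estimate


def kalman_update(prior: Estimate, measurement: float, meas_variance: float) -> Estimate:
    """Scalar Kalman measurement update for a directly observed state."""
    # The gain weights the measurement by how uncertain the prior is
    # relative to the sensor.
    gain = prior.variance / (prior.variance + meas_variance)
    mean = prior.mean + gain * (measurement - prior.mean)
    variance = (1.0 - gain) * prior.variance
    return Estimate(mean, variance)


def fuse_readings(prior: Estimate, readings: list[tuple[float, float]]) -> Estimate:
    """Apply one update per (measurement, variance) pair, in order."""
    estimate = prior
    for measurement, meas_variance in readings:
        estimate = kalman_update(estimate, measurement, meas_variance)
    return estimate


# Hypothetical readings: a precise LiDAR range and a noisier ultrasonic range.
prior = Estimate(mean=2.0, variance=1.0)
fused = fuse_readings(prior, [(2.10, 0.01), (2.40, 0.25)])
print(f"fused range: {fused.mean:.3f} m, variance: {fused.variance:.4f}")
```

The fused variance ends up below the variance of either sensor, and the estimate sits closer to the more precise reading. That is the noise-reduction effect described above, under the stated independence assumption.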

Accuracy and Reliability: Comparing Sensor Fusion and Single Sensor Systems

Sensor fusion enhances accuracy by combining data from multiple sensors, reducing individual sensor errors and providing a more comprehensive environmental understanding. Single sensor systems often suffer from limitations such as noise, occlusion, or failure, which compromise reliability and precision. Integrating diverse sensor inputs through fusion algorithms significantly boosts robustness and consistency in robotic perception and decision-making tasks.
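The claim that fusion reduces individual sensor errors can be made concrete with a short worked case. Assume two sensors give independent, unbiased measurements $z_1$ and $z_2$ of the same quantity, with noise variances $\sigma_1^2$ and $\sigma_2^2$. These symbols are introduced here for illustration only. Weighting each measurement by the inverse of its variance gives the fused estimate and its variance:

$$
\hat{x} = \frac{\sigma_2^2\, z_1 + \sigma_1^2\, z_2}{\sigma_1^2 + \sigma_2^2},
\qquad
\sigma_{\text{fused}}^2 = \frac{\sigma_1^2\, \sigma_2^2}{\sigma_1^2 + \sigma_2^2}
$$

Because $\sigma_1^2 / (\sigma_1^2 + \sigma_2^2) < 1$, the fused variance is smaller than $\sigma_2^2$, and by symmetry smaller than $\sigma_1^2$ as well. With hypothetical values $\sigma_1^2 = 0.04$ and $\sigma_2^2 = 0.01$:

$$
\sigma_{\text{fused}}^2 = \frac{0.04 \times 0.01}{0.04 + 0.01} = 0.008 < 0.01
$$

The result holds only under the independence and zero-bias assumptions. Correlated noise or a miscalibrated sensor reduces the benefit.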

Cost Considerations: Sensor Fusion vs. Single Sensor

Sensor fusion costs more upfront than a single-sensor setup because it requires multiple sensors and additional processing, but integrating several data sources improves accuracy and reliability and reduces the likelihood of errors. A single sensor has a lower upfront expense but may incur greater long-term costs due to potential failures and less comprehensive data capture. Where errors or downtime are expensive, sensor fusion can therefore be the more cost-efficient choice over the life of the system, although the balance depends on the application.

Implementation Complexity in Robotic Systems

Sensor fusion significantly increases implementation complexity in robotic systems. Data from multiple heterogeneous sensors must be integrated and synchronized, and interpreting it accurately requires algorithms such as Kalman filters or deep learning models. Single-sensor setups are simpler to implement and have lower computational demands, but often at the expense of reliability and perception accuracy. This trade-off between implementation complexity and system robustness is critical when designing sensor architectures for autonomous robots and industrial automation.
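One concrete part of the synchronization problem is pairing samples from sensors that run at different rates. The sketch below shows one simple approach, nearest-timestamp matching with a tolerance. It assumes both streams are sorted and already share a clock. The function names, the timestamps, and the 15 ms tolerance are all hypothetical.

```python
from bisect import bisect_left


def nearest_index(timestamps: list[float], t: float) -> int:
    """Index of the entry in a sorted, non-empty list closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align_streams(
    cam_stamps: list[float], imu_stamps: list[float], tolerance: float
) -> list[tuple[int, int]]:
    """Pair each camera sample with the nearest IMU sample within tolerance (seconds)."""
    pairs: list[tuple[int, int]] = []
    if not imu_stamps:
        return pairs
    for cam_idx, t in enumerate(cam_stamps):
        imu_idx = nearest_index(imu_stamps, t)
        if abs(imu_stamps[imu_idx] - t) <= tolerance:
            pairs.append((cam_idx, imu_idx))
    return pairs


# Hypothetical timestamps in seconds: a slow camera and an irregular IMU stream.
camera = [0.000, 0.100, 0.200, 0.300]
imu = [0.000, 0.010, 0.020, 0.097, 0.105, 0.190, 0.350]
pairs = align_streams(camera, imu, tolerance=0.015)
print(pairs)
```

Camera frames with no IMU sample inside the tolerance are dropped rather than paired with stale data. Interpolating between neighbouring samples is a common alternative when dropping frames is not acceptable.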

Real-World Applications: Case Studies and Examples

Sensor fusion enhances robotic perception by integrating data from multiple sensors such as LiDAR, cameras, and IMUs, and the resulting accuracy and reliability are put to use in autonomous vehicles and industrial robots. Single sensors often face limitations such as occlusion or environmental interference. Sensor fusion mitigates these by supplying complementary information, as in drones navigating complex environments and robotic arms performing precision tasks. In warehouse automation, sensor fusion is reported to improve object detection and localization, increasing efficiency and safety compared to single-sensor systems.

Data Processing Demands: Fusion vs. Single Sensor Approaches

Sensor fusion integrates data from multiple sensors, requiring advanced algorithms and increased computational power for real-time processing, while single sensor systems demand less processing but risk higher error rates and lower reliability. Fusion approaches enhance accuracy and robustness by combining complementary information, yet they impose greater data handling and synchronization challenges on the system's processor. Balancing processing demands with system capabilities is crucial for optimizing performance in robotics applications.

Future Trends: Evolving Sensor Technologies in Robotics

Future trends in robotics emphasize the integration of advanced sensor fusion techniques that combine data from LiDAR, RGB-D cameras, and inertial measurement units (IMUs) to enhance environmental perception and decision-making accuracy. Emerging technologies leverage machine learning algorithms to optimize multi-sensor data interpretation, enabling robots to perform complex tasks in dynamic, unstructured environments with higher reliability than single-sensor systems. Ongoing innovations in low-power, high-resolution sensors and edge computing are expected to further propel sensor fusion capabilities, driving smarter, more autonomous robotic applications across industries.

Choosing the Right Approach: Key Factors for Roboticists

Sensor fusion enhances robotic perception by integrating data from multiple sensors, improving accuracy and reliability over single sensor systems. Key factors for roboticists in choosing the right approach include task complexity, environmental conditions, and computational resources available for real-time processing. Balancing sensor costs with performance requirements ensures optimized design tailored to specific robotic applications.

Sensor fusion vs single sensor Infographic

techiny.com
