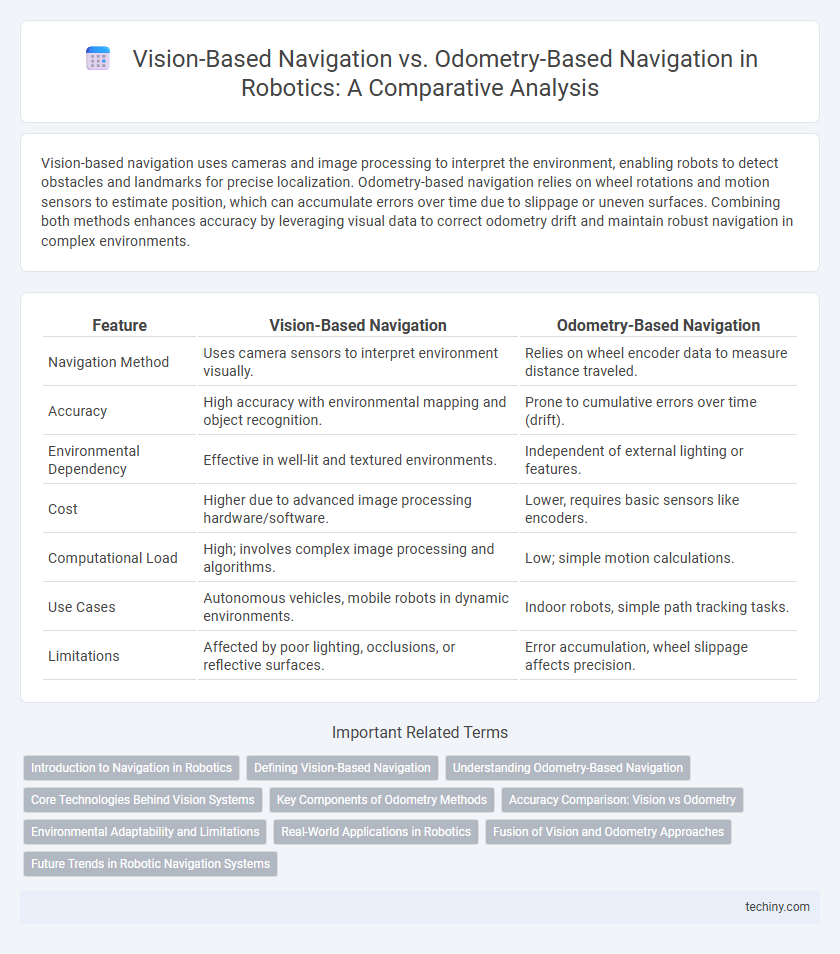

Vision-based navigation uses cameras and image processing to interpret the environment, enabling robots to detect obstacles and landmarks for precise localization. Odometry-based navigation relies on wheel rotations and motion sensors to estimate position, which can accumulate errors over time due to slippage or uneven surfaces. Combining both methods enhances accuracy by leveraging visual data to correct odometry drift and maintain robust navigation in complex environments.

Table of Comparison

| Feature | Vision-Based Navigation | Odometry-Based Navigation |
|---|---|---|
| Navigation Method | Uses camera sensors to interpret environment visually. | Relies on wheel encoder data to measure distance traveled. |
| Accuracy | High accuracy with environmental mapping and object recognition. | Prone to cumulative errors over time (drift). |
| Environmental Dependency | Effective in well-lit and textured environments. | Independent of external lighting or features. |
| Cost | Higher due to advanced image processing hardware/software. | Lower, requires basic sensors like encoders. |
| Computational Load | High; involves complex image processing and algorithms. | Low; simple motion calculations. |
| Use Cases | Autonomous vehicles, mobile robots in dynamic environments. | Indoor robots, simple path tracking tasks. |
| Limitations | Affected by poor lighting, occlusions, or reflective surfaces. | Error accumulation, wheel slippage affects precision. |

Introduction to Navigation in Robotics

Vision-based navigation leverages cameras and image processing algorithms to interpret the environment, enabling robots to identify landmarks and obstacles for precise localization and path planning. Odometry-based navigation relies on wheel encoders and inertial measurement units (IMUs) to estimate a robot's position by tracking its movement over time, but it is prone to cumulative errors and drift. Combining both methods often enhances navigation accuracy by compensating for odometry's drift with visual feedback, creating robust autonomous systems in robotics.

Defining Vision-Based Navigation

Vision-based navigation relies on cameras and image processing algorithms to interpret the robot's surroundings, enabling obstacle detection and path planning from visual data. It localizes the robot by matching features such as edges, landmarks, and textures against the environment. When a visual fix is referenced to known landmarks rather than to the previous pose, its error does not grow with distance traveled the way it does in odometry-based navigation, which depends on wheel encoders and inertial sensors. Vision-based systems therefore suit dynamic and complex environments where odometry errors accumulate, which makes them important for robots that need high-precision movement and adaptability.
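To make landmark-based localization concrete, here is a minimal sketch in Python. It assumes the vision pipeline has already detected a few landmarks, measured their positions in the robot's own frame (for example with a stereo camera), and matched them to known map coordinates. The function name `estimate_pose` and the 2D point format are illustrative assumptions, not part of any particular library.

```python
import math

def estimate_pose(landmarks_world, landmarks_robot):
    """Least-squares 2D pose (x, y, theta) from matched landmark pairs.

    landmarks_world: [(x, y), ...] known map positions (assumed given).
    landmarks_robot: [(x, y), ...] the same landmarks as seen in the robot frame.
    Model: world_point = R(theta) * robot_point + (x, y).
    """
    n = len(landmarks_world)
    if n < 2 or n != len(landmarks_robot):
        raise ValueError("need at least two matched landmark pairs")

    # Centroids of both point sets.
    wx = sum(p[0] for p in landmarks_world) / n
    wy = sum(p[1] for p in landmarks_world) / n
    rx = sum(p[0] for p in landmarks_robot) / n
    ry = sum(p[1] for p in landmarks_robot) / n

    # Accumulate dot and cross products of the centred points; their ratio
    # gives the rotation that best aligns the robot frame with the map.
    dot = 0.0
    cross = 0.0
    for (pwx, pwy), (prx, pry) in zip(landmarks_world, landmarks_robot):
        ax, ay = prx - rx, pry - ry
        bx, by = pwx - wx, pwy - wy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)

    # The translation maps the rotated robot-frame centroid onto the map centroid.
    c, s = math.cos(theta), math.sin(theta)
    x = wx - (c * rx - s * ry)
    y = wy - (s * rx + c * ry)
    return x, y, theta
```

Because every estimate is computed directly against the map, an error in one frame is not carried into the next. Real systems would also need feature detection, matching, and outlier rejection, none of which this sketch covers.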

Understanding Odometry-Based Navigation

Odometry-based navigation relies on data from wheel encoders and inertial measurement units (IMUs) to estimate a robot's position by calculating relative movement over time. This method is advantageous in environments where visual cues are limited or unreliable, such as in low-light or featureless spaces. However, odometry is susceptible to cumulative errors due to wheel slippage and sensor noise, necessitating periodic recalibration or integration with other navigation techniques for improved accuracy.
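The relative-movement calculation can be sketched for a differential-drive robot as follows. This is a minimal example under stated assumptions: the encoder resolution, wheel radius, and wheel base are hypothetical values chosen for illustration, and the update uses the common midpoint-heading approximation.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x axis

# Hypothetical robot parameters, for illustration only.
TICKS_PER_REV = 1024   # encoder ticks per wheel revolution
WHEEL_RADIUS = 0.05    # metres
WHEEL_BASE = 0.30      # metres between the two drive wheels

def ticks_to_distance(ticks: int) -> float:
    """Convert encoder ticks to distance rolled by one wheel."""
    return 2.0 * math.pi * WHEEL_RADIUS * ticks / TICKS_PER_REV

def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Dead-reckoning update from the tick counts of one sampling interval."""
    d_left = ticks_to_distance(left_ticks)
    d_right = ticks_to_distance(right_ticks)
    d_center = (d_left + d_right) / 2.0        # distance moved by the robot centre
    d_theta = (d_right - d_left) / WHEEL_BASE  # change in heading
    # Advance along the heading at the middle of the interval.
    mid_heading = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_center * math.cos(mid_heading),
        y=pose.y + d_center * math.sin(mid_heading),
        theta=pose.theta + d_theta,
    )
```

Each call builds on the previous pose, so any error in a tick count, the assumed wheel radius, or the assumed wheel base is carried into every later estimate. That is why the periodic correction described above is needed.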

Core Technologies Behind Vision Systems

Vision-based navigation builds on computer vision techniques such as visual simultaneous localization and mapping (visual SLAM) and deep learning-based image recognition to interpret complex environments. High-resolution cameras and stereo vision systems supply the image data, and many platforms pair them with LiDAR, which is a laser range sensor rather than a camera, to add direct distance measurements. Together these sensors provide the rich, multi-dimensional data needed for accurate object detection and obstacle avoidance. They let robots adapt to changing surroundings more readily than odometry-based navigation, which relies primarily on wheel encoder data and inertial measurements that are prone to cumulative errors.

Key Components of Odometry Methods

Odometry-based navigation relies heavily on key components such as wheel encoders, inertial measurement units (IMUs), and motor feedback systems to estimate a robot's position by measuring wheel rotations and changes in orientation. These sensors provide continuous motion data crucial for dead reckoning, allowing robots to track movement relative to a starting point without external references. Challenges include cumulative error and slip, which require sensor fusion and periodic calibration to maintain positional accuracy during autonomous navigation.

Accuracy Comparison: Vision vs Odometry

Vision-based navigation typically offers higher long-term accuracy because it continuously re-references the robot's position to visual landmarks and environmental features, which limits the cumulative error typical of odometry-based systems. Odometry relies on wheel rotations and inertial measurements; it is precise over short distances, but drift and wheel slip degrade its positional accuracy over time. Integrating vision with odometry can enhance overall navigation accuracy by combining spatial awareness with motion data.
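A small simulation illustrates how odometry error grows with distance. This is a sketch under assumed conditions, not measured data: the robot's encoders report equal wheel travel, so odometry believes the path is a straight line, while the right wheel is actually 1% larger than calibrated and the true path curves. The wheel base, step length, and scale error are hypothetical values.

```python
import math

def dead_reckon(steps, step_len, left_scale=1.0, right_scale=1.0, wheel_base=0.30):
    """Integrate a differential-drive path; the scale factors model wheel size error."""
    x = y = theta = 0.0
    for _ in range(steps):
        d_left = step_len * left_scale
        d_right = step_len * right_scale
        d_center = (d_left + d_right) / 2.0
        d_theta = (d_right - d_left) / wheel_base
        x += d_center * math.cos(theta + d_theta / 2.0)
        y += d_center * math.sin(theta + d_theta / 2.0)
        theta += d_theta
    return x, y, theta

def position_error(distance_m, scale_error=0.01, step_len=0.01):
    """Gap between the straight path odometry believes in and the actual path."""
    steps = round(distance_m / step_len)
    believed_x = steps * step_len  # odometry assumes identical wheels
    actual_x, actual_y, _ = dead_reckon(steps, step_len, right_scale=1.0 + scale_error)
    return math.hypot(actual_x - believed_x, actual_y)

if __name__ == "__main__":
    for distance in (1.0, 5.0, 10.0):
        print(f"{distance:5.1f} m travelled -> error {position_error(distance):.3f} m")
```

In this model the error grows faster than linearly with distance, because a small heading error keeps steering every subsequent step off course. A visual fix against a known landmark resets that accumulated error.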

Environmental Adaptability and Limitations

Vision-based navigation enables robots to adapt dynamically to complex or changing environments by interpreting visual data for obstacle detection and path planning, though it struggles under poor lighting or visually cluttered conditions. Odometry-based navigation offers reliable short-term positioning by measuring wheel rotations and inertial data but accumulates errors over time and fails to compensate for environmental changes without external corrections. Combining both methods often enhances navigational accuracy and adaptability, leveraging vision for environmental awareness and odometry for motion estimation.

Real-World Applications in Robotics

Vision-based navigation leverages cameras and image processing algorithms to enable robots to interpret their environment, providing robust obstacle detection and localization in dynamic, unstructured settings; typical examples are warehouse robots and autonomous vehicles. Odometry-based navigation relies on wheel encoders or inertial sensors to estimate position through motion tracking, offering good short-range precision in controlled or repetitive tasks such as industrial automation and mobile robots operating on flat surfaces. Combining vision-based systems with odometry enhances accuracy and reliability in real-world applications, addressing issues like sensor drift and environmental variability.

Fusion of Vision and Odometry Approaches

Fusion of vision-based navigation and odometry-based navigation enhances robotic localization accuracy by combining visual data with wheel encoder measurements. Vision sensors provide rich environmental information, correcting drift in odometry caused by wheel slippage or uneven terrain. Integrating these modalities through algorithms like Extended Kalman Filters or SLAM frameworks results in robust, real-time navigation performance for autonomous robots.
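The idea behind filter-based fusion can be sketched with a one-dimensional linear Kalman filter, which is a deliberately simplified stand-in for the Extended Kalman Filters mentioned above. The robot moves along a line, odometry drives the prediction step, and an occasional visual position fix drives the correction step. The class name, function names, and noise variances are assumptions made for this example.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    position: float  # metres along the track
    variance: float  # uncertainty of the position estimate, in m^2

def predict(est: Estimate, odom_delta: float, odom_var: float) -> Estimate:
    """Odometry step: move the estimate and grow its uncertainty."""
    return Estimate(est.position + odom_delta, est.variance + odom_var)

def correct(est: Estimate, vision_position: float, vision_var: float) -> Estimate:
    """Vision step: blend in an absolute position fix, weighted by confidence."""
    gain = est.variance / (est.variance + vision_var)  # Kalman gain in [0, 1]
    return Estimate(
        position=est.position + gain * (vision_position - est.position),
        variance=(1.0 - gain) * est.variance,
    )

if __name__ == "__main__":
    est = Estimate(position=0.0, variance=0.0)
    # Ten odometry steps of 1 m each; the assumed per-step variance models slip.
    for _ in range(10):
        est = predict(est, odom_delta=1.0, odom_var=0.04)
    print("odometry only:", est)
    # One visual fix against a known landmark (assumed measurement variance).
    est = correct(est, vision_position=9.5, vision_var=0.1)
    print("after vision fix:", est)
```

The variance grows with every odometry step and shrinks when a visual fix arrives, which is the drift-correction behavior described above. A practical system tracks full 2D or 3D pose and linearizes nonlinear motion and measurement models, which is where the Extended Kalman Filter comes in.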

Future Trends in Robotic Navigation Systems

Future trends in robotic navigation systems emphasize the integration of vision-based navigation with advanced sensor fusion techniques to enhance environmental understanding and decision-making accuracy. Research on AI-driven visual SLAM (Simultaneous Localization and Mapping) algorithms is accelerating, enabling robots to operate reliably in dynamic and unstructured environments. Continuous improvements in odometry sensors combined with machine learning models aim to reduce drift and error accumulation, fostering more robust and autonomous navigation capabilities.

Vision-based navigation vs Odometry-based navigation Infographic

techiny.com
