Simultaneous Localization and Mapping (SLAM) lets a robot build a map of an unknown environment while tracking its own pose within that map. Localization alone estimates the robot's pose against an existing map or known landmarks. Because SLAM solves both problems together, it is the usual choice for autonomous navigation in unstructured environments where no reliable prior map exists.

Table of Comparison

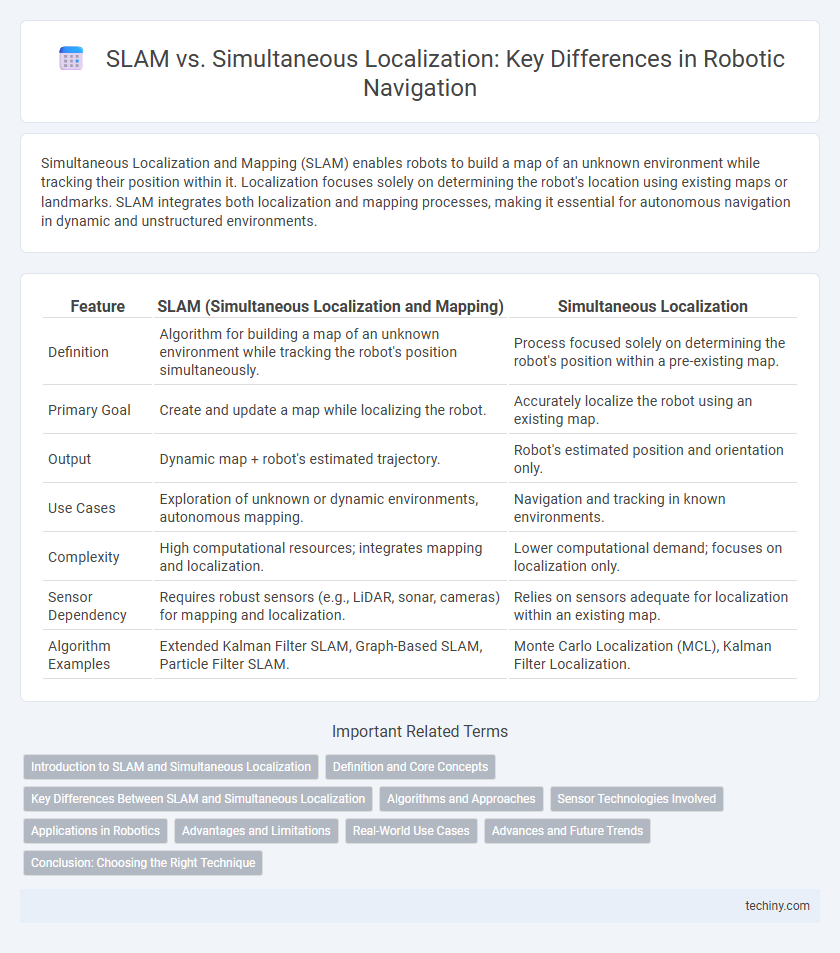

| Feature | SLAM (Simultaneous Localization and Mapping) | Simultaneous Localization |
|---|---|---|
| Definition | Algorithm for building a map of an unknown environment while tracking the robot's position simultaneously. | Process focused solely on determining the robot's position within a pre-existing map. |
| Primary Goal | Create and update a map while localizing the robot. | Accurately localize the robot using an existing map. |
| Output | Dynamic map + robot's estimated trajectory. | Robot's estimated position and orientation only. |
| Use Cases | Exploration of unknown or dynamic environments, autonomous mapping. | Navigation and tracking in known environments. |
| Complexity | High computational resources; integrates mapping and localization. | Lower computational demand; focuses on localization only. |
| Sensor Dependency | Requires robust sensors (e.g., LiDAR, sonar, cameras) for mapping and localization. | Relies on sensors adequate for localization within an existing map. |
| Algorithm Examples | Extended Kalman Filter SLAM, Graph-Based SLAM, Particle Filter SLAM. | Monte Carlo Localization (MCL), Kalman Filter Localization. |

Introduction to SLAM and Simultaneous Localization

Simultaneous Localization and Mapping (SLAM) is a core robotics technique that lets a robot build a map of an unknown environment while tracking its own position at the same time. SLAM combines data from sensors such as LiDAR or cameras with estimation algorithms to build maps in real time and localize the robot within them. Unlike standalone localization, SLAM addresses the dual challenge of mapping uncharted spaces and refining the robot's trajectory for precise navigation.

Definition and Core Concepts

SLAM (Simultaneous Localization and Mapping) builds a map of an unknown environment while simultaneously estimating the robot's pose within it, using data from sensors such as LiDAR, cameras, or IMUs. Simultaneous localization, as used here, refers to estimating the robot's pose relative to a known map or environment, without the mapping component. Core concepts of SLAM include data association (matching new observations to landmarks already in the map), state estimation (inferring the pose and map from noisy measurements), and loop closure (recognizing a previously visited place to correct accumulated drift). Together they reduce uncertainty and improve accuracy in complex environments.
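
As an illustration of data association, here is a minimal sketch of nearest-neighbour matching with a distance gate. The function name `associate`, the `gate` parameter, and the sample coordinates are assumptions made for this example; practical systems usually gate on a statistical distance that accounts for uncertainty rather than on plain Euclidean distance.

```python
import math

def associate(observations, landmarks, gate=1.0):
    """Match each observed (x, y) point to the nearest known landmark.

    Returns one entry per observation: the index of the matched landmark,
    or None when no landmark lies within `gate` metres (a candidate for a
    new landmark to add to the map).
    """
    matches = []
    for ox, oy in observations:
        best_idx, best_dist = None, gate
        for idx, (lx, ly) in enumerate(landmarks):
            dist = math.hypot(ox - lx, oy - ly)
            if dist <= best_dist:
                best_idx, best_dist = idx, dist
        matches.append(best_idx)
    return matches

# Hypothetical map landmarks and observations, all in the map frame.
landmarks = [(0.0, 0.0), (5.0, 0.0), (5.0, 5.0)]
observations = [(0.2, -0.1), (4.8, 5.3), (9.0, 9.0)]
print(associate(observations, landmarks))  # -> [0, 2, None]
```

The third observation falls outside the gate for every landmark, so it is reported as unmatched instead of being forced onto a wrong landmark, which is the kind of mistake that corrupts a map.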

Key Differences Between SLAM and Simultaneous Localization

SLAM (Simultaneous Localization and Mapping) involves building a map of an unknown environment while simultaneously keeping track of the robot's location within it, combining mapping and localization tasks. In contrast, Simultaneous Localization focuses solely on determining the robot's position using pre-existing maps or known landmarks without constructing a new map. Key differences include SLAM's dual functionality in unknown terrains versus Simultaneous Localization's reliance on existing spatial data for position estimation.
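
One common way to state this difference formally is through the probability distribution each problem estimates. The notation is an assumption borrowed from the usual probabilistic robotics convention, not something defined in this article: $x_t$ is the robot pose at time $t$, $m$ is the map, $z_{1:t}$ are the sensor measurements, and $u_{1:t}$ are the control or odometry inputs.

$$
\text{Localization:}\quad p(x_t \mid z_{1:t},\, u_{1:t},\, m)
$$

$$
\text{SLAM (online form):}\quad p(x_t,\, m \mid z_{1:t},\, u_{1:t})
$$

In localization the map $m$ appears on the conditioning side as a given. In SLAM it moves to the left of the bar and becomes an unknown that is estimated jointly with the pose.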

Algorithms and Approaches

SLAM (Simultaneous Localization and Mapping) algorithms integrate sensor data to construct or update a map while tracking a robot's pose in an unknown environment, using probabilistic methods such as Extended Kalman Filters, particle filters, and graph-based optimization. On the visual front end, approaches divide into feature-based and direct methods, and recent work applies deep learning to improve accuracy and robustness. Simultaneous localization estimates only the pose against an existing map, typically with Monte Carlo Localization (a particle filter) or Kalman-filter localization, fusing LiDAR, camera, and IMU data. Factor graphs and bundle adjustment, by contrast, are mainly used where poses and map structure are optimized jointly, as in graph-based and visual SLAM.
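
To make the filter-based approach concrete, the following toy sketch jointly estimates a robot position and a single landmark position along a line. It is a simplification under stated assumptions, not a production algorithm: both models are linear, so a plain Kalman filter stands in for the Extended Kalman Filter, and the function name `kf_slam_1d`, the noise variances `q` and `r`, and the noise-free test data are all hypothetical.

```python
def kf_slam_1d(controls, measurements, q=0.01, r=0.04):
    """Toy 1D SLAM: jointly estimate robot position x and landmark position l.

    Motion model:      x <- x + u     (process noise variance q)
    Measurement model: z = l - x      (measurement noise variance r)
    """
    x, l = 0.0, 0.0                    # start pose known, landmark unknown
    pxx, pxl, pll = 0.0, 0.0, 100.0    # covariance entries; large pll = no prior
    for u, z in zip(controls, measurements):
        # Predict: move the robot and grow its uncertainty.
        x += u
        pxx += q
        # Update: H = [-1, 1] maps the state (x, l) to the expected range l - x.
        innovation = z - (l - x)
        s = pxx - 2.0 * pxl + pll + r  # innovation variance H P H^T + r
        k_x = (pxl - pxx) / s          # Kalman gain for the robot position
        k_l = (pll - pxl) / s          # Kalman gain for the landmark position
        x += k_x * innovation
        l += k_l * innovation
        # Covariance update P <- (I - K H) P, written out for the 2x2 case.
        pxx, pxl, pll = (
            (1.0 + k_x) * pxx - k_x * pxl,
            (1.0 + k_x) * pxl - k_x * pll,
            k_l * pxl + (1.0 - k_l) * pll,
        )
    return x, l, pll

# Hypothetical scenario: landmark at 10 m, robot advances 1 m per step.
true_landmark = 10.0
controls = [1.0] * 5
measurements = [true_landmark - (i + 1) for i in range(5)]  # noise-free ranges
robot, landmark, landmark_var = kf_slam_1d(controls, measurements)
print(round(robot, 2), round(landmark, 2), landmark_var)
```

The landmark sits inside the state vector next to the robot position, which is what separates this from localization: there the landmark position would be a known constant and only `x` would be estimated.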

Sensor Technologies Involved

SLAM (Simultaneous Localization and Mapping) fuses data from sensors such as LiDAR, cameras, and IMUs to build a detailed map of the environment while tracking the robot's pose in real time. Simultaneous localization alone typically fuses odometry, GPS, and IMU data, and matches range or camera observations against the existing map, to determine the robot's pose without mapping the surroundings. Because SLAM must also perceive and reconstruct the environment, it generally places higher demands on sensor range, resolution, and calibration than a system that only estimates position.
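
The simplest form of the sensor fusion mentioned above is inverse-variance weighting of two independent estimates of the same quantity, which is also what a single Kalman update reduces to in one dimension. The sketch below assumes a 1D position and made-up variances for odometry and GPS; the function name `fuse` is hypothetical.

```python
def fuse(estimate_a, var_a, estimate_b, var_b):
    """Inverse-variance weighted fusion of two independent 1D estimates."""
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_var = 1.0 / (weight_a + weight_b)
    fused = fused_var * (weight_a * estimate_a + weight_b * estimate_b)
    return fused, fused_var

# Hypothetical readings: odometry has drifted (high variance), GPS is tighter.
odometry_pos, odometry_var = 10.0, 4.0
gps_pos, gps_var = 12.0, 1.0
position, variance = fuse(odometry_pos, odometry_var, gps_pos, gps_var)
print(round(position, 3), round(variance, 3))
```

With these numbers the fused position lands at about 11.6 m, closer to the more trusted GPS reading, and the fused variance of about 0.8 is lower than that of either input.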

Applications in Robotics

Simultaneous Localization and Mapping (SLAM) enables robots to build and update maps of unknown environments while simultaneously tracking their position, essential for autonomous navigation in dynamic and unstructured settings. SLAM algorithms enhance the performance of indoor service robots, drone navigation, and autonomous vehicles by providing real-time environmental awareness and obstacle avoidance. These applications demonstrate the critical role of SLAM in achieving efficient, precise, and reliable robotic localization and environmental mapping.

Advantages and Limitations

Simultaneous Localization and Mapping (SLAM) lets a robot navigate without a prior map by building one while concurrently tracking its position. SLAM excels in GPS-denied environments, making it well suited to indoor robotics, autonomous vehicles, and drone applications, but it requires significant computational power and can struggle in feature-poor or highly dynamic scenes. It is also sensitive to sensor noise and drift, which lead to localization errors or map inaccuracies if the algorithm lacks robust sensor fusion and loop closure techniques.
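
The effect of drift and of loop closure can be shown with a deliberately simplified sketch. It assumes translation-only poses, equally trusted odometry steps, and a robot that is known to have returned exactly to its starting point. Under those assumptions the least-squares correction spreads the closure error evenly over all steps. The function names and the biased odometry values are hypothetical, and real pose-graph optimizers also handle rotation and unequal weights.

```python
def integrate(steps):
    """Dead-reckon a path from (0, 0) by summing (dx, dy) odometry steps."""
    x = y = 0.0
    path = [(x, y)]
    for dx, dy in steps:
        x += dx
        y += dy
        path.append((x, y))
    return path

def close_loop(steps):
    """Spread the end-of-loop error evenly across all odometry steps."""
    n = len(steps)
    err_x = sum(dx for dx, _ in steps)  # nonzero only because of drift
    err_y = sum(dy for _, dy in steps)
    return [(dx - err_x / n, dy - err_y / n) for dx, dy in steps]

# A 1 m square loop measured with a constant per-step bias of (0.05, 0.02).
odometry = [(1.05, 0.02), (0.05, 1.02), (-0.95, 0.02), (0.05, -0.98)]

drifted_end = integrate(odometry)[-1]
corrected_end = integrate(close_loop(odometry))[-1]
print(drifted_end, corrected_end)
```

Before correction the dead-reckoned path ends roughly 0.2 m and 0.08 m away from the start in x and y. After the closure constraint is applied, it ends back at the origin up to floating-point error.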

Real-World Use Cases

SLAM (Simultaneous Localization and Mapping) enables robots to create accurate maps of unknown environments while tracking their position in real-time, essential for autonomous navigation in warehouses and self-driving cars. Simultaneous localization relies on sensor fusion techniques using LiDAR, cameras, and IMUs to achieve precise robot positioning in dynamic settings like urban roads and industrial sites. Real-world use cases demonstrate SLAM's effectiveness in drone delivery systems, robotic vacuum cleaners, and augmented reality applications, where environmental awareness and adaptability are critical for performance.

Advances and Future Trends

Advances in SLAM (Simultaneous Localization and Mapping) leverage deep learning and sensor fusion to enhance accuracy and robustness in complex environments. Emerging trends emphasize real-time processing with lightweight algorithms suitable for drones and autonomous vehicles. Future developments target fully autonomous systems with improved adaptability to dynamic, unstructured settings using AI-driven semantic mapping.

Conclusion: Choosing the Right Technique

Selecting the appropriate technique between SLAM and simultaneous localization depends on the specific robotic application and environmental complexity. SLAM provides comprehensive mapping and localization in unknown environments, making it ideal for dynamic or unstructured settings. In contrast, pure simultaneous localization suits applications with pre-existing maps where fast and efficient position estimation is paramount.

SLAM vs Simultaneous localization Infographic

techiny.com
